This article is part of the Communication and System Design Series: Have SmartNIC - Will Compute

We've jumped many chasms over the past three decades of server-based computing. In the 1990s, we went from single-socket standalone servers to clusters. Then with the millennium, we first saw dual-socket and later multicore processors. As we turned another decade, GPUs went far beyond graphics, and we witnessed the emergence of field-programmable gate-array (FPGA)-based accelerator cards.

As we're well into 2020, SmartNICs (network interface cards), also known as DPUs (data processing units), are coming into fashion. They boast huge FPGAs, multicore Arm clusters, or even a blend of both, with each of these advances bringing significant gains in solution performance. From trading stocks to genomic sequencing, computing is about getting answers faster. Under the covers, the roadway has been PCI Express (PCIe), which has undergone significant changes but is mainly taken for granted.

PCIe Evolution

Peripheral Component Interconnect Express, or PCIe, first appeared in 2003 and, coincidentally, arrived just as networking was getting ready to start the jump from Gigabit Ethernet (GbE) as the primary interconnect. At this point, high-performance computing (HPC) networks like Myrinet and InfiniBand had just pushed beyond GbE with data rates of 2 Gb/s and 8 Gb/s, respectively. Shortly after that, well-performing 10-GbE NICs emerged onto the scene. They could move nearly 1.25 GB/s in each direction, so the eight-lane (x8) PCIe bus couldn't have been timelier.

The first-generation PCIe x8 bus delivered 2 GB/s in each direction. Back then, 16-lane (x16) slots were unheard of, and server motherboards often had only a few x8 slots and several x4 slots. To save a buck, some server vendors even used x8 connectors but wired them only for x4. Boy, that was fun.

Most people (OK, nerdy architects like me) know that the speed has doubled with every PCIe generation. Today, a fourth-generation PCIe x8 slot is good for about 16 GB/s, so the next generation will be roughly 32 GB/s. If that's all the fifth generation of PCIe brought, that would be fine. However, it also comes with an Aladdin's Lamp filled with promise in the form of two new protocols, Compute Express Link (CXL) and Cache Coherent Interconnect for Accelerators (CCIX), which create efficient communication between CPUs and accelerators like SmartNICs or co-processors.
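The doubling rule is easy to put into numbers. The sketch below is only a rule-of-thumb calculator: it assumes roughly 250 MB/s per lane per direction for first-generation PCIe and a clean doubling with each generation, which reproduces the round figures quoted in this article. The function name `pcie_gb_s` is made up for illustration, and real links deliver somewhat less once encoding and protocol overhead are counted.

```python
# Rule-of-thumb PCIe throughput per direction, following the
# "doubles every generation" simplification. Not a measurement.

GEN1_GB_S_PER_LANE = 0.25  # assumption: ~250 MB/s per lane for PCIe Gen1


def pcie_gb_s(generation: int, lanes: int) -> float:
    """Approximate one-way bandwidth in GB/s for a PCIe link."""
    if generation < 1 or lanes < 1:
        raise ValueError("generation and lanes must be positive")
    return GEN1_GB_S_PER_LANE * lanes * 2 ** (generation - 1)


if __name__ == "__main__":
    for gen in range(1, 6):
        print(f"Gen{gen} x8: {pcie_gb_s(gen, 8):g} GB/s per direction")
```

Under these assumptions a Gen1 x8 link works out to 2 GB/s, Gen4 x8 to 16 GB/s, and Gen5 x8 to 32 GB/s, in line with the numbers above.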

Compute Express Link

Let's start with CXL. It provides a well-defined master-slave model where the CPU's root complex can share both cache and main system memory over a high-bandwidth link with an accelerator card (Fig. 1).

This enables the host CPU to efficiently dispatch work to the accelerator and receive the product of that work. Some of these accelerators have sizable high-performance local memories using DRAM or high-bandwidth memory (HBM). With CXL, these high-performance memories can now be shared with the host CPU, making it easier to operate on datasets in shared memory.

Furthermore, for atomic transactions, CXL can share cache memory between the host CPU and the accelerator card. CXL goes a long way toward improving host communications with accelerators, but it doesn't address accelerator-to-accelerator communications on the PCIe bus.

In 2018, the Linux kernel finally rolled in code to support PCIe peer-to-peer (P2P) mode. This made it easier for one device on the PCIe bus to share data with another. While P2P existed before this kernel update, it required some serious magic to work, often requiring that you had programmatic control over both peer devices. With the kernel change, it’s now relatively straightforward for an accelerator to talk with PCIe/NVMe memory on the PCIe bus or another accelerator.

As solutions become more complex, simple P2P will not be enough, and it will constrain solution performance. Today, we have persistent memory sitting in DIMM sockets, NVMe storage, and smart storage (SmartSSD) plugged directly on the PCIe bus, along with a variety of accelerator cards and SmartNICs or DPUs, some with vast memories of their own. As these devices are pressed to communicate with one another, our expensive server processors will become costly traffic lights bottlenecking enormous data flows. This is where CCIX comes in—it provides a context for establishing peer-to-peer relationships between devices on the PCIe bus.

Cache Coherent Interconnect for Accelerators

Some view CCIX as a competing standard to CXL, but it’s not. CCIX’s approach to peer-to-peer connections on the bus is what makes it significantly different (Fig. 2). Furthermore, it can take memory from different devices, each with varying performance characteristics, pool it together, and map it into a single non-uniform memory access (NUMA) architecture. Then it establishes a Virtual Address space, enabling all of the devices in this pool access to the full range of NUMA memory. This goes far beyond the simple memory-to-memory copy of PCIe P2P, or the master-slave model put forth by CXL.
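To make the pooling idea concrete, here is a toy model of it. This isn't how CCIX is implemented; it's a sketch that lays a few memory regions end to end in one address space and resolves an address back to the device that owns it. The device names, sizes, and the `Region`, `build_address_map`, and `resolve` helpers are all hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    device: str  # hypothetical device name
    size: int    # bytes this device contributes to the pool


def build_address_map(regions):
    """Lay regions end to end; return (ascending base addresses, regions)."""
    bases, next_base = [], 0
    for region in regions:
        bases.append(next_base)
        next_base += region.size
    return bases, list(regions)


def resolve(address, bases, regions):
    """Translate a pooled address to (device, offset within that device)."""
    index = bisect_right(bases, address) - 1
    if index < 0 or address >= bases[index] + regions[index].size:
        raise ValueError(f"address {address:#x} is outside the pool")
    return regions[index].device, address - bases[index]


GIB = 1 << 30
pool = [
    Region("host-dram", 4 * GIB),
    Region("accelerator-hbm", 1 * GIB),
    Region("smartssd", 16 * GIB),
]
bases, regions = build_address_map(pool)

print(resolve(0, bases, regions))               # lands in host DRAM
print(resolve(4 * GIB + 4096, bases, regions))  # lands in accelerator HBM
print(resolve(5 * GIB, bases, regions))         # lands in the SmartSSD
```

Every device in the pool sees the same addresses, while the "non-uniform" part is that the cost of an access depends on which region the address falls in.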

As a concept, NUMA has been around since the early 1990s, so it’s extremely well understood. Building on this, today, most servers easily scale to a terabyte (TB) or more of expensive DRAM. In addition, drivers exist that can map a new type of memory called persistent memory (PMEM), also known as storage class memory (SCM), alongside real memory to create “huge memory.” With PCIe 5 and CCIX, this should further enable system architects to extend this concept using SmartSSDs.

Computational Storage

SmartSSDs, also known as computational storage, place a computing device, often an FPGA accelerator, alongside the storage controller within a solid-state drive, or embed a compute function inside the controller. This enables the computing device in the SmartSSD to operate on data as it enters and exits the drive, potentially redefining both how data is accessed and stored.

Initially, SmartSSDs are viewed as block devices, but with the appropriate future driver installed in the FPGA, they could be made to look like byte-addressable storage. Today, SmartSSDs are produced with multiple terabytes of capacity, but capacities will explode. Therefore, SmartSSDs could be used to extend the concept of huge memory, only via NUMA, so that both the host CPU and accelerator applications can access many terabytes of memory, across numerous devices, without rewriting the applications using this memory. Furthermore, SmartSSDs can provide better TCO solutions by enabling in-line compression and encryption.

Enter SmartNICs

How exactly do SmartNICs fit into this architecture? SmartNICs are a special class of accelerators that sit at the nexus between the PCIe bus and the external network. While SmartSSDs place computing close to data, SmartNICs place computing close to the network. Why is this important? Simply put, server applications rarely concern themselves with network latency, congestion, packet loss, protocols, encryption, overlay networks, or security policies.

To address these issues, protocols like QUIC were created to lower latency, reduce congestion, and recover from packet loss. We’ve crafted TLS and extended that with kernel TLS (kTLS) to provide encryption and secure data in flight. We’re now seeing kTLS being added as an offload capability for SmartNICs.

To support the orchestration of virtual machines (VMs) and containers, we created overlay networks. This was followed by technologies like Open vSwitch (OvS) to define and manage them. SmartNICs are starting to offload OvS.

Finally, we have security, which is managed through policies. These policies are expected to be reflected in the orchestration framework in forms like Calico and Tigera. Soon these policies will also be offloaded into SmartNICs using programmable match-action frameworks like P4. All of these are tasks that should be offloaded into these specialized accelerators called SmartNICs.

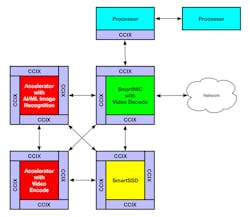

With CCIX, an architect would then be able to build a solution where multiple accelerators can directly access real memory and the storage inside SmartSSDs as one huge-memory space with a single virtual address space. For example, a solution could be constructed using four different accelerators (Fig. 3).

The SmartNIC could have a video decoder loaded into it so that as video arrives from cameras, it can be converted back into uncompressed frames and stored in a shared frame buffer within the NUMA virtual address space. As these frames become available, a second accelerator running an artificial-intelligence (AI) image-recognition application can scan these frames for faces or license plates. In parallel, a third accelerator could be transcoding these frames for display and long-term storage. Finally, a fourth application running on the SmartSSD removes the frames from the frame buffer once the AI and transcoding tasks are successfully completed. Here we have four very specialized accelerators working together in what might become known as a “SmartWorld” application.
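The division of labor among those four accelerators can be sketched in a few lines. This is a single-process simulation, not real accelerator code: a plain dictionary stands in for the shared frame buffer, and every function name, the `Frame` class, and the byte-string "video" are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    pixels: bytes
    done: set = field(default_factory=set)  # stages finished with this frame


# Stand-in for the shared frame buffer in the pooled address space.
frame_buffer = {}


def smartnic_decode(frame_id, compressed):
    """Accelerator 1: 'decode' incoming video and publish the raw frame."""
    frame_buffer[frame_id] = Frame(pixels=compressed * 4)  # placeholder decode


def ai_scan(frame_id):
    """Accelerator 2: pretend to look for a license plate in the frame."""
    frame = frame_buffer[frame_id]
    frame.done.add("ai")
    return b"plate" in frame.pixels


def transcode(frame_id):
    """Accelerator 3: pretend to re-encode the frame for display and storage."""
    frame = frame_buffer[frame_id]
    frame.done.add("transcode")
    return frame.pixels[:8]


def smartssd_reclaim():
    """Accelerator 4: drop frames once both consumers have finished."""
    finished = [fid for fid, f in frame_buffer.items()
                if {"ai", "transcode"} <= f.done]
    for fid in finished:
        del frame_buffer[fid]
    return finished


smartnic_decode(0, b"plate-ABC123")
smartnic_decode(1, b"empty-road")
hits = [fid for fid in (0, 1) if ai_scan(fid)]
transcode(0)                    # frame 1 is still waiting on the transcoder
reclaimed = smartssd_reclaim()  # only frame 0 is eligible for removal
```

The point of the sketch is the hand-off: no stage copies a frame to the next one. Each stage reads the same buffer entry, and the reclaim step acts only after both consumers have marked the frame as done.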

The industry started adding more cores to offset the issues around Moore's Law. Now there’s an abundant number of cores, but not enough bandwidth to the CPU from external devices such as NICs, storage, and accelerators. PCIe Gen5 is our next hop, and it enables a much bigger conduit to open up high-performance computing on the CPU.

For example, a typical CPU core can handle 1 Gb/s+, so dual 128-core CPUs can consume upward of 256 Gb/s (32 GB/s), and a PCIe Gen4 x16 slot, at roughly 32 GB/s, isn’t enough. Cache coherency provided by the CXL and CCIX protocols offers tremendous benefits for applications demanding tight interaction between CPU cores and accelerators. Primary application workloads such as databases, security, and multimedia are now starting to leverage these advantages.
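The arithmetic behind that example is worth spelling out. The sketch below uses only this article's round numbers (1 Gb/s per core at the low end, about 16 GB/s for a Gen4 x8 slot) and assumes bandwidth scales linearly with lane count and doubles with each generation. The variable and function names are illustrative.

```python
# Back-of-the-envelope check using the article's round numbers,
# not measured throughput.

SOCKETS = 2
CORES_PER_SOCKET = 128
GBIT_S_PER_CORE = 1.0    # "1 Gb/s+" per core, taken at its lower bound
GEN4_X8_GBYTE_S = 16.0   # the article's figure for a Gen4 x8 slot


def aggregate_demand_gbyte_s(sockets, cores, gbit_per_core):
    """Total I/O the cores could consume, converted from Gb/s to GB/s."""
    return sockets * cores * gbit_per_core / 8


demand = aggregate_demand_gbyte_s(SOCKETS, CORES_PER_SOCKET, GBIT_S_PER_CORE)
gen4_x16 = GEN4_X8_GBYTE_S * 2  # twice the lanes of x8
gen5_x16 = gen4_x16 * 2         # one generation later, double again

print(f"demand   : {demand:g} GB/s")
print(f"Gen4 x16 : {gen4_x16:g} GB/s")
print(f"Gen5 x16 : {gen5_x16:g} GB/s")
```

Even at the lower bound, 256 cores can consume everything a Gen4 x16 slot delivers in one direction, so any core doing better than 1 Gb/s pushes demand past it. Gen5 x16 restores the headroom.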

Orchestration

The final piece of the puzzle is orchestration. This is the capability whereby frameworks like Kubernetes can automatically discover and manage accelerated hardware and mark it in the orchestration database as online and available. It will then need to know if this hardware supports one or more of the protocols mentioned above. Subsequently, as requests for new solution instances come in and are dynamically spun up, those container instances that are aware of, and are accelerated by, these advanced protocols can use this hardware.
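In miniature, that discover-then-dispatch logic might look like the following. This is a plain-Python toy and doesn't use the Kubernetes API or any vendor resource manager. The `Accelerator` record, the `schedule` function, and the device names are hypothetical, and a real scheduler would weigh far more than protocol support.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    protocols: frozenset  # protocols the device supports, e.g. {"CXL"}
    online: bool = True   # set by a (hypothetical) discovery step
    in_use: bool = False


def schedule(inventory, required_protocol):
    """Claim the first online, idle accelerator that supports the protocol."""
    for device in inventory:
        if (device.online and not device.in_use
                and required_protocol in device.protocols):
            device.in_use = True
            return device
    return None  # nothing suitable: the caller must wait or run unaccelerated


inventory = [
    Accelerator("smartnic-0", frozenset({"CXL"})),
    Accelerator("fpga-0", frozenset({"CXL", "CCIX"}), online=False),
    Accelerator("smartssd-0", frozenset({"CCIX"})),
]

first = schedule(inventory, "CCIX")   # skips the offline FPGA
second = schedule(inventory, "CCIX")  # no idle CCIX device remains
```

Here the first request lands on `smartssd-0` because `fpga-0` is marked offline, and the second request comes back empty. That is exactly the situation in which an orchestrator needs an accurate, current inventory.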

Xilinx has developed the Xilinx Resource Manager (XRM) to work with Kubernetes and manage multiple FPGA resources in a pool, thereby improving overall accelerator utilization. The result is that newly launched application instances can be automatically dispatched to execute on the most appropriate and performant resources within the infrastructure while remaining within the defined security policies.

SmartNICs and DPUs that leverage PCIe 5 and CXL or CCIX will offer us richly interconnected accelerators that will enable the development of complex and highly performant solutions. These SmartNICs will provide compute-intensive connections to other systems within our data center or the world at large. It’s even possible to envision a future where a command comes into a Kubernetes controller, running natively on a SmartNIC resource, to spin up a container or pod. The bulk of computation for this new workload might then occur on an accelerator device somewhere else within that server, all without ever getting the server’s host CPU directly involved.

For this to function correctly, we’ll need additional security enhancements that go well beyond Calico and Tigera. We’ll also need new accelerator-aware security frameworks for extending security contexts, often called secure enclaves, across multiple computational platforms. This is where Confidential Computing comes in. It should provide both the architecture and an API for protecting data in-use across multiple computational platforms within a single secure enclave.

Much like a sensitive compartmented information facility (SCIF) can be an entire Department of Defense building, a secure enclave within a computer should be capable of spanning multiple computational platforms. Exciting times ahead.

Scott Schweitzer is Technology Evangelist at Xilinx.
