
Signal integrity is the primary measure of signal quality. Its importance increases with higher signal speed, oscilloscope bandwidth, the need to view small signals, or the need to see small changes on larger signals. Signal integrity impacts all oscilloscope measurements. Oscilloscopes themselves are subject to the signal-integrity challenges of distortion, noise, and loss.

Oscilloscopes with superior signal-integrity attributes offer better representations of your signals under test, while representations provided by those with poor signal-integrity attributes will be inferior. This difference impacts an engineer’s ability to gain insight and to understand, debug, and characterize designs.

Thus, selecting an oscilloscope that has good signal-integrity attributes is important; the alternative is increased risk in terms of development-cycle times, production quality, and the components chosen. To evaluate oscilloscope signal integrity, we will look at analog-to-digital converter (ADC) bits, vertical scaling, noise, frequency response, phase response, effective number of bits (ENOB), and intrinsic jitter.

ADC Bits

Resolution is the smallest quantization (Q) level determined by the ADC in the oscilloscope. The higher the number of ADC bits, the greater the resolution. For example, an 8-bit ADC can encode an analog input to one of 256 different levels (since 2^8 = 256), while a 10-bit ADC ideally provides four times that resolution (2^10 = 1024 Q levels).

Vertical Scaling

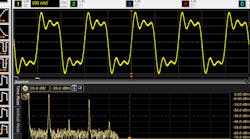

Since the ADC operates on the full-scale vertical value, proper vertical scaling also helps increase oscilloscope resolution. Figure 1 shows a full screen of 800 mV (8 divisions × 100 mV/div).

1. Resolution is an important signal-integrity attribute. More ADC bits and proper vertical scaling are two ways to increase resolution.

An oscilloscope with an 8-bit ADC has a resolution of 3.125 mV (800 mV/256 Q levels), while a 10-bit ADC has a resolution of 0.781 mV. Each oscilloscope can only resolve signals down to the smallest Q level.
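The arithmetic above can be sketched in a few lines of Python. The function name `q_level_mv` and its parameters are illustrative and not part of any oscilloscope API; the sketch assumes an ideal ADC whose codes span exactly the full-screen voltage.

```python
def q_level_mv(full_scale_mv: float, adc_bits: int) -> float:
    """Smallest quantization step (mV) of an ideal ADC spanning full_scale_mv."""
    return full_scale_mv / (2 ** adc_bits)


full_screen_mv = 8 * 100  # 8 divisions x 100 mV/div, as in Figure 1
for bits in (8, 10):
    step = q_level_mv(full_screen_mv, bits)
    print(f"{bits}-bit ADC: {step:.3f} mV per Q level")
```

Each extra ADC bit halves the step size, which is why the 10-bit step is one quarter of the 8-bit step at the same vertical setting.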

To get the best resolution, use the most sensitive vertical-scaling setting while keeping the full waveform on the display. Scaling the waveform to consume almost the full vertical display makes full use of your oscilloscope’s ADC. If a signal is scaled to take up only half or less of the vertical display, you will lose one or more ADC bits.
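The "lose one or more ADC bits" rule of thumb follows from the fact that each halving of the signal's share of the display halves the number of Q levels it spans. A minimal sketch, assuming an ideal ADC and a hypothetical helper named `bits_in_use`:

```python
import math


def bits_in_use(adc_bits: int, display_fraction: float) -> float:
    """Approximate ADC bits exercised by a signal that fills
    display_fraction (0 < fraction <= 1) of the full vertical display."""
    if not 0 < display_fraction <= 1:
        raise ValueError("display_fraction must be in (0, 1]")
    return adc_bits + math.log2(display_fraction)


for fraction in (1.0, 0.5, 0.25):
    print(f"8-bit ADC, signal fills {fraction:.0%}: "
          f"{bits_in_use(8, fraction):.1f} bits in use")
```

A signal filling half the display uses about one bit fewer than the ADC provides, and a signal filling a quarter uses about two fewer.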

The combination of the ADC, the oscilloscope’s front-end architecture, and the probe used determines the limit of vertical scaling supported by the oscilloscope hardware. At a certain point, each family of oscilloscopes cannot go to a lower vertical scale. Vendors will often refer to this as the point where the oscilloscope moves into software magnification. Turning the oscilloscope’s vertical scale to a smaller number simply magnifies the displayed signal but doesn’t result in any additional resolution.

Figure 2 gives an example of two oscilloscopes evaluating a small signal. The signal’s magnitude is such that a vertical scaling of 16 mV full screen allows the signal to consume almost the entire vertical display height. The traditional 8-bit oscilloscope goes into software magnification at 7 mV/div, resulting in a minimum resolution of 218 μV (7 mV/div × 8 div/256 Q levels). A 10-bit oscilloscope, such as the Keysight Infiniium S-Series, stays in hardware all the way down to 2 mV/div, giving a minimum resolution of 15.6 µV (2 mV/div × 8 div/1024 Q levels)—14 times the resolution of the 8-bit oscilloscope.

2. The minimal vertical setting an oscilloscope supports in hardware will be important for seeing small signal detail.
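The effect of software magnification on resolution can be sketched as follows. The function `resolution_uv` is a hypothetical helper; it assumes an ideal ADC, 8 vertical divisions, and that resolution stops improving once the requested setting falls below the hardware minimum.

```python
def resolution_uv(requested_mv_per_div: float,
                  hw_min_mv_per_div: float,
                  adc_bits: int,
                  divisions: int = 8) -> float:
    """Q-level size in microvolts at a requested vertical setting.

    Below hw_min_mv_per_div the scope only magnifies in software,
    so the hardware minimum sets the resolution.
    """
    effective_mv_per_div = max(requested_mv_per_div, hw_min_mv_per_div)
    return effective_mv_per_div * divisions * 1000 / (2 ** adc_bits)


# Both scopes set to 2 mV/div (16 mV full screen), as in the Figure 2 example.
eight_bit = resolution_uv(2, hw_min_mv_per_div=7, adc_bits=8)
ten_bit = resolution_uv(2, hw_min_mv_per_div=2, adc_bits=10)
print(f"8-bit scope: {eight_bit:.2f} uV, 10-bit scope: {ten_bit:.2f} uV, "
      f"ratio: {eight_bit / ten_bit:.0f}x")
```

The ratio combines two effects: a factor of 4 from the extra two ADC bits and a factor of 3.5 from the lower hardware vertical limit.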

Noise

Noise impacts horizontal as well as vertical measurements. The lower the noise, the better signal integrity you can expect. If noise levels are higher than ADC quantization levels, you won’t be able to take advantage of the additional ADC bits. Having an oscilloscope with low noise (high dynamic range) is critical if you want to see small currents and voltages, or small changes on larger signals.

Noise can come from a variety of sources, including the oscilloscope’s front end, its ADC, and the probe or cable used to connect the device. The ADC itself has quantization noise, but this typically plays a lesser role in overall noise contribution than the front end.
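To put quantization noise in perspective, the standard result for an ideal quantizer is an rms noise of Q/√12. The sketch below applies it to the 8-bit, 800-mV example and combines it with a front-end noise figure by root-sum-square. The front-end value is hypothetical, chosen only for illustration, and the combination assumes the noise sources are uncorrelated.

```python
import math


def quantization_noise_rms(q_level: float) -> float:
    """RMS noise of an ideal quantizer whose step size is q_level."""
    return q_level / math.sqrt(12)


def total_noise_rms(*sources_rms: float) -> float:
    """Root-sum-square of noise sources, assuming they are uncorrelated."""
    return math.sqrt(sum(s ** 2 for s in sources_rms))


q_mv = 800 / 2 ** 8          # 3.125-mV step: 8-bit ADC, 800 mV full screen
adc_mv = quantization_noise_rms(q_mv)
front_end_mv = 2.0           # hypothetical front-end noise, mV rms
print(f"quantization noise: {adc_mv:.3f} mV rms")
print(f"total with front end: {total_noise_rms(adc_mv, front_end_mv):.3f} mV rms")
```

Because the sources add in quadrature, the larger contributor dominates the total, which is consistent with the front end usually mattering more than the ADC.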

Most oscilloscope vendors will characterize noise and include these values on their product datasheet. If not, you can ask for the data, or find out yourself. The measurement is easy and takes only a few minutes. Each oscilloscope channel will have unique noise qualities at each vertical setting. Disconnect all inputs from the front of the oscilloscope and set it to the 50-Ω input path (you can also run the test for the 1-MΩ path).

Turn on a decent amount of acquisition memory, such as 1 Mpoint, and fix the sample rate at a high value to ensure you are getting the full oscilloscope bandwidth. You can assess the noise visually by looking at the thickness of the trace, or quantify it by measuring the AC rms voltage (V rms AC). Repeating this at each vertical setting tells you how much noise each oscilloscope channel has at each setting.
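If you export the acquired samples rather than rely on the built-in measurement, the AC rms value is the rms of the trace after its mean (DC) value is removed. The sketch below is one way to compute it; the simulated trace is a stand-in for real exported data, and the noise level and offset used are hypothetical.

```python
import math
import random
from typing import Sequence


def ac_rms(samples: Sequence[float]) -> float:
    """AC rms: rms of the samples after subtracting their mean (DC) value."""
    if len(samples) == 0:
        raise ValueError("need at least one sample")
    mean = sum(samples) / len(samples)
    return math.sqrt(sum((s - mean) ** 2 for s in samples) / len(samples))


# Stand-in for an exported open-input trace: a small DC offset plus
# Gaussian noise (both values are hypothetical).
rng = random.Random(0)
trace_mv = [0.05 + rng.gauss(0.0, 0.12) for _ in range(100_000)]
print(f"noise: {ac_rms(trace_mv):.3f} mV rms (AC)")
```

Removing the mean first keeps any DC offset on the channel from inflating the noise figure.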

Frequency Response

Uniform and flat oscilloscope frequency response is highly desired for signal integrity. Each oscilloscope model will have a unique frequency response that’s a quantitative measure of the oscilloscope’s ability to accurately acquire signals up to the rated bandwidth. Three requirements must be met to acquire waveforms accurately:

- A flat frequency response.

- A flat phase response.

- Captured signals must be within the bandwidth of the oscilloscope.

A flat frequency response indicates that the oscilloscope is treating all frequencies equally. A flat phase response means the signal is delayed by precisely the same amount of time at all frequencies. Deviation from one or more of these requirements will cause an oscilloscope to inaccurately acquire and draw a waveform.

Some scopes have correction filters that are typically implemented in hardware digital-signal-processing (DSP) blocks and tuned for a family of oscilloscopes. Figure 3 shows how correction filters can improve signal integrity of the measurement by creating a flat magnitude and phase response. The oscilloscope on the right shows a waveform that accurately matches the spectral content of the signal, while the one on the left does not.

3. Two oscilloscopes with identical bandwidth rating, sample rate, and other settings were connected to an identical signal. The difference between the two is that the one on the right uses hardware DSP correction filters to produce a flat magnitude and phase response, while the one on the left does not.

Your oscilloscope’s overall frequency response will be a combination of the oscilloscope’s frequency response and the frequency response of any probes or cables connected between the device under test (DUT) and the instrument. If you put a BNC cable that has 1.5-GHz bandwidth on the front of a 4-GHz oscilloscope, the system’s overall bandwidth is limited by the BNC cable and not the oscilloscope. Make sure your probes, accessories, and cables aren’t the limiting factor for a precision measurement.
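A common rule of thumb for estimating the combined bandwidth of cascaded elements is the root-sum-of-squares relation shown below. It assumes each element has a roughly Gaussian roll-off, which is an assumption not made in this article; instruments with flatter, sharper responses combine differently, so treat the result as an estimate only. The function name is illustrative.

```python
import math


def system_bandwidth_ghz(*bandwidths_ghz: float) -> float:
    """Estimate combined -3 dB bandwidth of cascaded elements,
    assuming Gaussian-like responses (rule of thumb)."""
    if not bandwidths_ghz or any(bw <= 0 for bw in bandwidths_ghz):
        raise ValueError("provide one or more positive bandwidths")
    return 1.0 / math.sqrt(sum(1.0 / bw ** 2 for bw in bandwidths_ghz))


cable_ghz, scope_ghz = 1.5, 4.0
print(f"estimated system bandwidth: "
      f"{system_bandwidth_ghz(cable_ghz, scope_ghz):.2f} GHz")
```

Under this approximation the system bandwidth always comes out below that of the weakest element, so the 1.5-GHz cable dominates the result.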

Effective Number of Bits (ENOB)

ENOB is a measure of the oscilloscope’s dynamic performance expressed in a series of bits-vs.-frequency curves. Each curve is created at a specific vertical setting while frequency is varied. The resulting voltage measurements are captured and evaluated. In general, a higher ENOB (expressed in bits) is better.

While some vendors may provide the ENOB value of the oscilloscope’s ADC separately, this figure has no value. ENOB of the entire system is what’s important. The ADC could have a great ENOB, but poor oscilloscope front-end noise would dramatically lower the ENOB of the entire system. Engineers who look exclusively at ENOB to gauge signal integrity should be cautioned. ENOB does not consider offset errors or phase distortion that the oscilloscope may inject.

An oscilloscope doesn’t just have one ENOB number. Rather it has different ENOB values for each vertical setting and frequency.
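ENOB is conventionally derived from a measured signal-to-noise-and-distortion ratio (SINAD) using ENOB = (SINAD − 1.76 dB) / 6.02 dB. The article does not spell out this formula, so the sketch below is offered as the standard definition rather than as any particular vendor's procedure; the SINAD values in the loop are hypothetical.

```python
def enob_from_sinad_db(sinad_db: float) -> float:
    """Effective number of bits from SINAD in dB (standard definition)."""
    return (sinad_db - 1.76) / 6.02


# Hypothetical system-level SINAD measurements at one vertical setting.
for freq_ghz, sinad_db in ((0.1, 49.9), (1.0, 46.0), (4.0, 42.5)):
    print(f"{freq_ghz:>4} GHz: SINAD {sinad_db} dB -> "
          f"ENOB {enob_from_sinad_db(sinad_db):.2f} bits")
```

Repeating the calculation across frequency and vertical settings produces the family of bits-vs.-frequency curves described above.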

Intrinsic Jitter

Jitter describes deviation from the ideal horizontal position. It’s measured in ps rms or ps peak-to-peak. Jitter sources include thermal and random mechanical noise from crystal vibration. Traces, cables, and connectors can further add jitter to a system through intersymbol interference.

Oscilloscopes themselves have jitter. The term “jitter measurement floor” refers to the jitter value reported by the oscilloscope when it measures a perfect jitter-free signal. The jitter-measurement-floor value comprises not only the sample clock jitter, but also vertical error sources, such as vertical noise and aliased signal harmonics. These vertical error sources affect horizontal time measurements because they change the timing of threshold crossings.
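A widely used approximation for the jitter measurement floor divides the vertical noise by the signal's slew rate at the threshold to get a time error, then combines that with the sample clock jitter in quadrature. The sketch below assumes the two contributions are uncorrelated and ignores aliased harmonics; the function name and the example values are hypothetical.

```python
import math


def jitter_floor_ps(sample_clock_jitter_ps: float,
                    noise_mv_rms: float,
                    slew_rate_mv_per_ps: float) -> float:
    """Approximate jitter measurement floor in ps rms.

    Vertical noise shifts the threshold crossing by noise / slew rate;
    that term adds in quadrature with the sample clock jitter.
    """
    if slew_rate_mv_per_ps <= 0:
        raise ValueError("slew rate must be positive")
    noise_induced_ps = noise_mv_rms / slew_rate_mv_per_ps
    return math.hypot(sample_clock_jitter_ps, noise_induced_ps)


# Hypothetical values: same scope, fast edge versus slow edge.
for slew in (2.0, 0.2):
    floor = jitter_floor_ps(0.13, noise_mv_rms=0.1, slew_rate_mv_per_ps=slew)
    print(f"slew {slew} mV/ps -> floor {floor:.3f} ps rms")
```

The slower the edge, the more the scope's vertical noise dominates the result, which is why low noise matters for jitter measurements as well as voltage measurements.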

Excessive jitter can cause timing violations that result in incorrect system behavior, or poor bit error rates (BER) that result in incorrect transmissions in communication systems. Measurement of jitter is necessary to ensure high-speed system reliability. Understanding how well your oscilloscope will make those measurements is critical to interpreting your jitter measurement results (Fig. 4).

4. Keysight’s Infiniium S-Series oscilloscopes include a new time-base technology block. Its clock accuracy is 75 parts per billion. Intrinsic jitter for short record lengths is less than 130 fs.

Summary

While each attribute is important, the most accurate measurements will come from the oscilloscope with the best overall combination of the seven attributes listed in the table. Judging an oscilloscope on a single signal-integrity attribute in isolation can lead to false conclusions about its quality, which in turn adds unnecessary risk to getting your products to market or meeting product-performance targets.

These seven signal-integrity attributes should be considered when choosing an oscilloscope.

For more detailed information, please download the Keysight application note.