Many neural-network designers find they’re both pediatricians and geriatricians. Pediatricians because the health of neural networks taking their first steps in the world is in their hands. Geriatricians because even at the start of their network’s life, they’re concerned with how the network will evolve or age as it integrates with all of the elements encompassed by AI at the edge.

This article discusses how siloing and other problems, caused in part by the lack of appropriate tools, complicate bringing machine learning to the very edge of the IoT. It also looks at how designers and developers can use new techniques and tools to address these problems and build connections.

Disconnects to Solve

Devices able to perform inference at the edge are increasingly agents of practical solutions. Think about people-counting in a retail location to compare those browsing in the store to those who buy. Or touchless airport check-in. From these and many other edge AI applications come the practical benefits of improved data security, reduced latency, and lower storage costs, among others. Initiatives like TensorFlow Lite for Microcontrollers and TinyML help make such advantages realizable.

However, as the pace of bringing AI to the embedded edge picks up, two needs have emerged: getting the network to run on “real” hardware, and adding AI specifically to embedded devices. Meeting these demands continues to be challenging for several reasons.

One problem is having to use whatever has been available, in some cases frameworks intended only for hobbyists and not for anything beyond the experimentation stage. Such frameworks lack the features needed to design production-grade embedded software. These features include reliably starting a network stack to communicate with the cloud, initiating Wi-Fi connectivity, and provisioning IoT devices with industry-grade security, to name just some of the components needed to build a commercial application.

Of course, frameworks whose features put them at the commercial, rather than hobby, level have been available, too. These are well-developed and well-defined for the Linux and Android operating systems. Using them when the target is an embedded device running a real-time operating system (RTOS) has taken extra effort and time. For instance, the limited memory and performance of an RTOS-based embedded system, compared with a rich OS like Android, require more care in how the neural-network designer integrates everything.

Besides the disconnect just noted, there’s another issue: When addressing Linux and Android needs, development takes place on the intended platform itself. But that development assumption is at odds with embedded systems practice. Embedded developers develop on a host machine and then cross-compile to run on the target device.

Another concern is that the overall framework, if not originally intended for embedded-device development, would not take into account using a graphical user interface, working within an integrated development environment (IDE), or using embedded debugging tools such as tracing and trace analyzers.

What all of these issues add up to is a degree of impracticality during the development process that has been impeding the emergence of neural networks from silos (Fig. 1).

Enabling the Success of Machine-Learning-at-the-Edge Applications

Machine learning at the edge has reached a point where it’s no longer enough to focus solely on the neural network’s first steps in the world—the deployment phase is here. How networks mature and become part of a cohesive solution in which updates and maintenance continue successfully is now the focus.

With the arrival of the deployment phase, both neural-network designers and embedded software designers seek practical ways to make developing machine-learning-at-the-edge applications less frustrating and time-consuming. Neural-network designers need the means to optimize neural networks by reducing memory size, the number of operations, and power consumption. Embedded software designers have sought ways to reduce the complexities of adding AI to embedded edge devices.
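One common way to reduce a network's memory size is to store weights as 8-bit integers instead of 32-bit floats. The sketch below illustrates generic affine quantization, where a real value is approximated as `scale * (q - zero_point)`. It is not the method of any particular compiler or platform; the function names `quantize_int8` and `dequantize` are hypothetical and chosen only for this illustration.

```python
def quantize_int8(weights):
    """Affine-quantize a list of floats to int8.

    Returns (quantized_values, scale, zero_point). Illustrative only.
    """
    # The representable range must include 0.0 so that zero maps exactly.
    lo = min(min(weights), 0.0)
    hi = max(max(weights), 0.0)
    span = hi - lo
    # Guard against an all-zero tensor, where the span would be 0.
    scale = span / 255.0 if span > 0 else 1.0
    # Integer that represents the real value 0.0.
    zero_point = round(-128 - lo / scale)
    quantized = [
        max(-128, min(127, round(w / scale) + zero_point)) for w in weights
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Map int8 values back to approximate real values."""
    return [scale * (q - zero_point) for q in quantized]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
    q, scale, zp = quantize_int8(weights)
    restored = dequantize(q, scale, zp)
    # float32 needs 4 bytes per weight, int8 needs 1.
    print("float32 bytes:", 4 * len(weights), "int8 bytes:", len(q))
    print("max round-trip error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

The storage saving is a fixed factor of four, while the accuracy cost depends on the network, which is why quantized models still need to be validated on real data.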

When considering a machine-learning application, product teams look for certain capabilities, such as the ability to experiment on sensor boards and evaluation boards expressly designed for their specific use cases. It helps if fast prototyping on real hardware can take place without the burden of writing any embedded code. The team will also want to know that the compiler enables neural-network designers to map and optimize their neural networks onto accelerators in the target hardware. Scalability and acceptance of commercial hardware are key as well.

Being able to show that the neural network runs with the middleware on a real board indicates to the team that the solution is industry-grade. Embedded software designers want this because getting the application over the finish line is then no longer a complicated process. Neural-network designers, in turn, want confidence that the application’s value and commercial viability can be demonstrated to the product team.

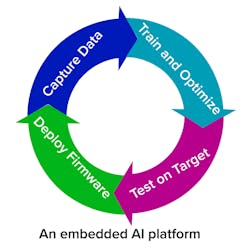

One approach to overcoming these issues is with an embedded AI platform tuned to the deployment phase (Fig. 2). Such a platform should securely acquire and store training data. It should automatically generate optimized AI models from a TensorFlow Lite model. The platform’s neural sensor processor must be able to seamlessly load AI models that include sensor interfaces. By including IoT cloud connectivity, device provisioning, and firmware upgrades based on new models or data, the platform can help assure the application’s ongoing viability.

A platform with these capabilities gives designers the flexibility, for example, to change how inferencing occurs or to update software components to reflect additional training that has led to more accurate inferencing. Updates could also involve the network stack, a security fix, or added device functionality.

Dissolving Silos

The TENSAI Flow platform developed by Eta Compute integrates elements that make machine learning at the edge more practical. Eta Compute and its partners, including Edge Impulse and the Google TensorFlow team, created a solution that includes a compiler, neural-network zoo, and middleware comprising FreeRTOS, HAL, and frameworks for sensors, as well as IoT/cloud enablement.

Taking on the problems that have created silos of neural-network design and embedded software design, TENSAI Flow bridges the two sides. Offering network optimization features matched to embedded target demands, TENSAI Flow wraps the neural-network code with middleware and firmware, directly usable by the embedded team. It enables quick prototyping and data collection on TENSAI Flow sensor boards or custom boards.

In addition, the platform makes managing multiple cores transparent. The advantage of distributing the workload among multiple cores can be realized without in-house multicore software writing tasks slowing development.

Use Case 1: Porting a Neural Network to an Edge Device

Consider the task of porting an existing neural network, known to provide the right accuracy, to an edge device. This task can be very time-consuming: The developer must optimize the neural network to fit into the necessarily limited resources of an edge device while preserving network speed and accuracy. In the worst case, the effort may not even converge.

Developers first convert their neural network to the TensorFlow Lite (.tflite) format, then use the compiler to generate optimized code. The compiler generates code optimized for the device, saving a great deal of time and reducing risk. The developer can then quickly move on, test on one of the TENSAI boards, and validate the neural network on real hardware.

Even then, the optimized code may not meet the memory and performance constraints of the device. This isn’t a rare case: Many neural networks have been developed within academia with little consideration for real hardware, and they may use architectures unsuitable for the embedded world.

The compiler gives immediate information about the network size and an estimate of the execution time. Because the compiler generates the most optimized code possible, the developer knows very quickly if the task is impossible and can focus on more suitable networks, like those in the TENSAI Flow neural-network zoo.
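To make the idea of a quick size and execution-time check concrete, here is a back-of-the-envelope sketch that counts weights and multiply-accumulate operations (MACs) per layer and compares them with a device budget. It is not how the TENSAI Flow compiler computes its report; the class names, the `estimate` and `fits` helpers, and the default throughput of 50 million MACs per second are all assumptions made for illustration. The estimate also ignores activation memory and any per-layer overhead.

```python
from dataclasses import dataclass


@dataclass
class Conv2D:
    in_ch: int
    out_ch: int
    kernel: int   # square kernel size
    out_h: int    # output feature-map height
    out_w: int    # output feature-map width

    def params(self):
        # Weights plus one bias per output channel.
        return self.kernel * self.kernel * self.in_ch * self.out_ch + self.out_ch

    def macs(self):
        # One kernel application per output position and output channel.
        return (self.kernel * self.kernel * self.in_ch * self.out_ch
                * self.out_h * self.out_w)


@dataclass
class Dense:
    in_features: int
    out_features: int

    def params(self):
        return self.in_features * self.out_features + self.out_features

    def macs(self):
        return self.in_features * self.out_features


def estimate(layers, bytes_per_weight=1, macs_per_second=50e6):
    """Rough weight storage and latency; macs_per_second is a made-up figure."""
    total_params = sum(layer.params() for layer in layers)
    total_macs = sum(layer.macs() for layer in layers)
    return {
        "weight_bytes": total_params * bytes_per_weight,
        "macs": total_macs,
        "latency_s": total_macs / macs_per_second,
    }


def fits(report, flash_budget_bytes, latency_budget_s):
    """True if the estimate is within both the storage and latency budgets."""
    return (report["weight_bytes"] <= flash_budget_bytes
            and report["latency_s"] <= latency_budget_s)


if __name__ == "__main__":
    net = [Conv2D(in_ch=1, out_ch=8, kernel=3, out_h=32, out_w=32),
           Dense(in_features=128, out_features=10)]
    report = estimate(net)
    print(report, "fits:", fits(report, flash_budget_bytes=2048, latency_budget_s=0.01))
```

A check like this can rule out a clearly oversized architecture early, before any time is spent tuning it.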

Use Case 2: Achieving the Right Accuracy

A second common use case arises when the developer’s neural network fits nicely in the hardware and executes quickly enough but doesn’t provide quite the right accuracy. This often happens, for instance, when the neural-network developer uses an off-the-shelf network such as MobileNet for object detection and wants to identify a specific type of object under certain conditions. The default dataset wasn’t designed for this specific use case, so more training is required with a more specific, better-suited dataset.

This situation can be remedied easily by using one of the TENSAI boards and TENSAI Flow to acquire more data. Using the Edge Impulse TinyML pipeline, which is integrated with TENSAI Flow, the developer can acquire, label, and share data in the cloud with all other developers. They can also manage data buckets, apply data transformation and augmentation, and manage dataset revisions over their lifecycle.
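As an illustration of what data augmentation can mean for sensor data, the sketch below expands a small labeled dataset by adding a random circular time shift and a little Gaussian noise to each window. It does not use or represent the Edge Impulse API; `augment_window`, `augment_dataset`, and their parameters are hypothetical names and values chosen for this example.

```python
import random


def augment_window(samples, rng, noise_std=0.02, max_shift=4):
    """Return a shifted, noisy copy of one window of sensor samples."""
    n = len(samples)
    shift = rng.randint(-max_shift, max_shift)
    # Circular shift keeps the window length unchanged.
    shifted = [samples[(i - shift) % n] for i in range(n)]
    # Small additive noise mimics sensor jitter.
    return [x + rng.gauss(0.0, noise_std) for x in shifted]


def augment_dataset(labeled_windows, copies=3, seed=0):
    """Keep the originals and append `copies` augmented variants of each.

    labeled_windows is a list of (samples, label) pairs. A fixed seed makes
    the augmented set reproducible, which helps when tracking dataset revisions.
    """
    rng = random.Random(seed)
    out = list(labeled_windows)
    for samples, label in labeled_windows:
        for _ in range(copies):
            out.append((augment_window(samples, rng), label))
    return out


if __name__ == "__main__":
    data = [([0.0, 1.0, 0.0, -1.0], "walk"), ([0.0, 0.0, 0.0, 0.0], "idle")]
    augmented = augment_dataset(data, copies=3, seed=1)
    print(len(data), "windows expanded to", len(augmented))
```

Augmentation of this kind supplements, rather than replaces, collecting real data under the conditions the product will actually see.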

These use cases show the immediate benefit for the neural-network developer tasked with delivering a suitable network. An additional benefit is that the platform generates code directly usable by embedded software developers, code that meets industry standards for commercial applications. Developers thus have the assurance that their neural network can be used and deployed in a real product. Seeing the fruit of one’s labor shipped on thousands or millions of devices in the field is a very gratifying moment for any engineer.

Conclusion

In the last few years, we have seen confirmation that efficient, low-power machine learning can occur in small devices. However, disconnects in the development process and silos between the neural-network and embedded worlds have hampered commercial deployment. We are now more prepared, thanks to the introduction of new hardware and software solutions, to move on to widespread, concrete deployment of AI at the edge.

Semir Haddad is Senior Director of Product Marketing at Eta Compute.