What you’ll learn

- How AI gave us ML, and, in turn, TinyML.

- How ML and TinyML are being used today.

- What may be on the horizon.

Machine learning, a form of artificial intelligence, traces its roots to the late 1950s, when IBM employee Arthur Samuel coined the term while working on computer game playing (specifically checkers) and AI.

Shortly after, in the 1960s, Raytheon developed CyberTron, a learning machine built to analyze sonar signals, electrocardiograms, and speech patterns using an early form of reinforcement learning. Human operators trained the machine to recognize specific sound patterns, and it was even equipped with a “goof” button that, when pressed, prompted it to reevaluate wrong conclusions.

Decades later, machine learning has become an invaluable tool in a wide range of industries. This form of AI powers chatbots, language-translation apps, show recommendations on Netflix, social media feeds, and predictive text. It can be found in autonomous vehicles, home-automation systems, medical applications, manufacturing, banking, and more. When companies use AI in their businesses, chances are they’re using machine learning.

While machine learning plays a prominent role in our daily lives, it has yet to reach certain areas. One reason is that it demands significant computing resources.

Many machine-learning models take advantage of today’s powerful hardware architectures to keep up with the demand for high-performance computing. This means most can’t be scaled down to run on more limited hardware, such as mobile and IoT devices or embedded systems. They’re also expensive to train, and the high-end systems and servers needed to run them add further cost.

Those issues, and more, have led to the development of a subfield of machine learning that can be used with hardware-constrained devices, known as TinyML (tiny machine learning). But before we dive into what TinyML is and what it can do, let’s look at its more prominent cousin to better understand the underlying technology.

Machine Learning

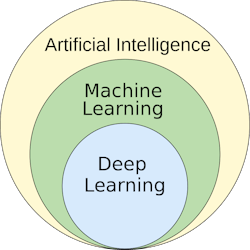

As mentioned earlier, machine learning is a subset of artificial intelligence (Fig. 1). It’s designed to allow software applications to learn from data to make accurate predictions or decisions without being explicitly programmed. Machine-learning algorithms use provided data, including photos, numbers, or text, to carry out specific tasks by “learning” in a similar fashion to humans. The more relevant data they receive, the more accurate they can become in a given application.

To that end, there are myriad machine-learning models in use today, but they’re generally based on three learning approaches:

- Supervised: These are machine-learning models trained with labeled data sets, allowing the models to learn and grow more accurate over time. For example, a model is trained using pictures of cats and other subjects, all of which have been labeled by humans. It uses that data to learn how to identify cats on its own, without the need for additional input.

- Unsupervised: With unsupervised models, the machine-learning algorithm looks for patterns in unlabeled data, finding patterns and trends with minimal to no human input. For example, the algorithm could look through business sales data and identify distinct groups of clients or consumers.

- Reinforcement: This approach trains algorithms via trial and error, using a reward system to steer them toward the best action. Reinforcement models can be used to play games or train autonomous vehicles to drive by informing them when they make the correct decisions. That feedback helps the model learn over time which actions to take.
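The trial-and-error loop behind reinforcement learning can be sketched in a few lines. This is a minimal illustration, not a production method: the function name `run_bandit`, the fixed reward values, and the "optimistic initial values" exploration trick are all assumptions chosen to keep the example deterministic. Real environments return noisy rewards and usually need randomized exploration.

```python
def run_bandit(rewards, steps=20, optimistic_start=1.0):
    """Learn which action pays best purely from trial, error, and reward.

    `rewards` is a hypothetical, fixed payoff per action. Every payoff is
    assumed to be below `optimistic_start`, so each action gets tried once.
    """
    estimates = [optimistic_start] * len(rewards)  # optimistic initial guesses
    counts = [0] * len(rewards)
    history = []
    for _ in range(steps):
        # Greedy choice: take the action that currently looks best.
        action = max(range(len(rewards)), key=lambda a: estimates[a])
        reward = rewards[action]  # feedback from the (toy) environment
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
        history.append(action)
    return estimates, history


if __name__ == "__main__":
    estimates, history = run_bandit([0.2, 0.8, 0.5])
    print("actions tried:", history)
    print("learned value estimates:", estimates)
```

With the toy payoffs above, the agent samples each action once and then keeps choosing the second action, because that is the one the reward signal favors.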

Machine learning is best suited to applications that involve lots of data or millions of examples, such as sound recordings, sensor logs, ATM transactions, and so forth. Google Translate is an excellent example of machine-learning capabilities, as it was trained using vast amounts of data found on the web in different languages. Some of the most widely used machine-learning algorithms today include k-means, linear and logistic regression, support vector machines (SVMs), and k-nearest neighbors (KNN).
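To make two of those algorithms concrete, here is a small standard-library sketch of k-nearest neighbors (supervised, because it needs labels) and k-means (unsupervised, because it does not). The function names, the two-dimensional toy points, and the "cat"/"dog" labels are illustrative assumptions; real projects would normally rely on an established library and far more data.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Supervised: label `query` by majority vote of its k nearest labeled points."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def kmeans(points, centroids, iterations=10):
    """Unsupervised: group unlabeled points around the given starting centroids."""
    centroids = [tuple(c) for c in centroids]
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: attach every point to its closest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            idx = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[idx].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters


if __name__ == "__main__":
    # Hypothetical toy data: two well-separated groups of 2-D feature vectors.
    labeled = [
        ((1.0, 1.0), "cat"), ((1.2, 0.8), "cat"), ((0.9, 1.1), "cat"),
        ((5.0, 5.0), "dog"), ((5.2, 4.8), "dog"), ((4.9, 5.1), "dog"),
    ]
    print(knn_predict(labeled, (1.1, 1.0)))

    unlabeled = [features for features, _ in labeled]
    centroids, clusters = kmeans(unlabeled, [(0.0, 0.0), (6.0, 6.0)])
    print(centroids)
```

The same points feed both functions: `knn_predict` uses the human-provided labels, while `kmeans` ignores them and recovers the two groups from the geometry alone.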

TinyML

As the name suggests, TinyML is a field of study within machine learning and embedded systems that explores the types of models that can run on small, low-power devices (Fig. 2). In other words, these are machine-learning models designed to run on hardware- and power-limited devices and embedded systems.

They enable those devices and systems to use machine learning to drive remote sensor packages that monitor and collect real-time data, track inventory in retail settings, and serve as health-monitoring devices for personalized care.

TinyML is capable of running machine-learning models on microcontrollers and embedded systems without relying on the cloud or other resources. This is because the models are highly optimized through techniques such as quantization, compression, and pruning, which reduce their size and complexity. Although the resulting models are smaller, they remain capable enough for their respective applications.
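Two of those techniques are easy to illustrate on a plain list of weights. The sketch below shows symmetric 8-bit quantization and magnitude-based pruning under simplifying assumptions: the function names and sample weights are hypothetical, a single scale factor covers all the weights, and nothing here reflects how any particular framework implements these steps.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute value."""
    n_prune = int(len(weights) * fraction)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:n_prune])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    weights = [0.02, -1.27, 0.5, -0.03, 0.9, 0.01]  # hypothetical layer weights
    quantized, scale = quantize_int8(weights)
    print(quantized, scale)
    print(dequantize(quantized, scale))
    print(prune_by_magnitude(weights, 0.5))
```

Storing each weight as an 8-bit integer instead of a 32-bit float cuts that storage to a quarter, at the cost of a rounding error of at most half the scale factor per weight. Pruning adds zeros that a compression step can then store cheaply.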

Some advantages of TinyML include on-device analytics without the need for servers, as data can be processed with low latency using local hardware. The models are also able to run on very little power, such as that supplied by a coin-cell battery. Moreover, they don’t need an internet connection to function, which improves data privacy because the data stays on the device.

Some companies and institutions have developed frameworks for building and deploying TinyML models, including Edge Impulse, a development environment designed for edge computing and embedded devices. Others include TensorFlow Lite, a deep-learning framework that converts models into a compact format optimized for on-device inference, and PyTorch Mobile, an open-source machine-learning framework that can run on mobile devices.

While TinyML is still in its infancy, it has gained in popularity over the last few years as single-board computers (SBCs), embedded systems, and IoT devices have exhibited unprecedented growth in nearly every industry. It’s also given rise to the TinyML Foundation, a professional organization of senior AI experts from 90 companies whose goal is to continue developing the platform for the latest technologies and creating new architectures. It will be interesting to see how TinyML evolves over the next decade and what technologies will take advantage of those developments.