Intel rolled out its latest server chip designed from the ground up for tackling deep learning, as the semiconductor giant steps up its efforts to outflank NVIDIA in a booming data-center market.

The Santa Clara, California-based company said Gaudi2 is twice as fast as its first-generation predecessor, with enhanced memory and networking features that set it apart from NVIDIA’s rival graphics processors. Manufactured by TSMC on the 7-nm node, down from 16 nm for the first generation, the server chip is purpose-built for training AI models to understand human speech and classify objects in images, among other capabilities.

Gaudi2 is the second-generation chip designed by Habana Labs, the startup that Intel acquired for about $2 billion in 2019, aiming to expand its portfolio of AI chips suited for data centers.

Intel’s AI Pursuits

The Habana-designed chips put more pressure on NVIDIA, which sells graphics processing units (GPUs) that are the current gold standard for training machine-learning models in data centers. Dominance in the AI market helped NVIDIA overtake Intel as the most valuable chip company in the U.S.

Habana’s Gaudi chips are only one pillar of Intel’s broader strategy to gain ground on NVIDIA in the market for AI chips, said Sandra Rivera, senior vice president and general manager of Intel’s data center and AI group.

Intel dominates the global market for supplying central processing units, the brains behind cloud servers and data centers, and it has upgraded its Xeon server CPUs with AI acceleration. But it is also betting on building a suite of chips including its Altera FPGAs, Habana AI accelerators, and Arc GPUs that can be linked tightly together to bolster performance and reduce power consumed by a wide range of AI workloads.

“AI is driving the data center,” said Eitan Medina, chief operating officer at Habana. “Where Habana fits is when the customer wants to use a server mostly for deep-learning compute.”

Tensor Processing Power-Up

Medina said that while Gaudi2 can tackle AI training faster and with better energy efficiency than its predecessor, it is also competing against NVIDIA’s data-center GPUs on another metric: price.

Last year, Amazon Web Services (AWS) rolled out a cloud-computing service based on the first-generation Gaudi, and it claimed that customers would get up to 40% better price-performance than instances based on NVIDIA GPUs. That, in turn, could give AWS the ability to reduce prices for customers renting its servers.

Intel said Gaudi2 brings a significant boost in performance to its battle with NVIDIA for AI chip leadership. The Gaudi2 is based on the same heterogeneous architecture as its predecessor. But moving from the 16-nm to the 7-nm node made it possible for Intel to fit in more compute engines, cache, and networking features. “We use that to upgrade all the major subsystems inside the accelerator,” Medina said.

Habana’s Gaudi2 comprises 24 compute engines called Tensor Processor Cores (TPCs) that are based on its very-long-instruction-word (VLIW) architecture and are programmable in C and C++ via a compiler.

Other improvements include the ability for the TPCs and the Matrix Multiplication Engine (MME) in Habana’s Gaudi2 to run AI workloads using lower-precision data types, including the 8-bit floating-point (FP8) format.

Intel plans to roll out Gaudi2—also called HL-2080—on accelerator cards based on the standard OAM form factor.

Ethernet Boost

On top of offering faster processing speeds, Intel said it also upgraded the networking features in Gaudi2.

The Gaudi2 integrates 24 Ethernet ports directly on the die, each running RoCE (RDMA over Converged Ethernet) at up to 100 Gb/s, up from 10 ports of 100 GbE in the first generation.

Integrating RoCE ports into the processor itself gives customers the ability to scale up to systems with thousands of Gaudi2 chips without having to attach separate networking cards, or NICs, to every server in the data center. Intel said this also opens the door for customers to choose Ethernet switches and other networking gear from a wide array of vendors. That, in turn, helps to reduce costs at the system level.

Most of the Ethernet ports are used to communicate with the other Gaudi2 processors in the server. The remainder connect to other Gaudi2 servers in the data center or cluster. In aggregate, the 24 ports at 100 Gb/s each supply 2.4 Tb/s of networking throughput.

“This brings several advantages,” said Medina. “Reducing the number of components in the system reduces TCO for the end customer. Another one is the ability to use Ethernet as the scaling interface for clusters.”

He added, “That means that end customers can avoid using a proprietary interface,” such as NVIDIA’s NVLink GPU-to-GPU interconnect, “which will essentially lock them into a particular technology.”
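As a back-of-the-envelope check on the port figures quoted in this section, the short Python sketch below multiplies port count by line rate for both generations. It assumes every port runs at the full 100 Gb/s and ignores protocol overhead; the function and constant names are illustrative, not part of any Habana or Intel tool.

```python
# Port counts and per-port line rate as quoted in the article.
GAUDI_PORTS = 10        # first-generation Gaudi
GAUDI2_PORTS = 24       # Gaudi2
PORT_RATE_GBPS = 100.0  # gigabits per second per port (assumed fully used)


def aggregate_gbps(ports: int, rate_gbps: float = PORT_RATE_GBPS) -> float:
    """Raw aggregate line rate in Gb/s, ignoring protocol overhead."""
    return ports * rate_gbps


def gbps_to_tbytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to terabytes per second (8 bits per byte)."""
    return gbps / 8 / 1000


gaudi_total = aggregate_gbps(GAUDI_PORTS)
gaudi2_total = aggregate_gbps(GAUDI2_PORTS)

for name, total in (("Gaudi", gaudi_total), ("Gaudi2", gaudi2_total)):
    print(f"{name}: {total / 1000:.1f} Tb/s = {gbps_to_tbytes_per_s(total):.3f} TB/s")

print(f"Generational increase: {gaudi2_total / gaudi_total:.1f}x")
```

Under these assumptions, the 24 ports add up to 2.4 terabits per second, which is 0.3 terabytes per second, so the bit-versus-byte distinction matters when comparing this figure with the memory bandwidth numbers, which are quoted in bytes.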

Gaudi2’s memory subsystem was also upgraded. According to Intel, the Gaudi2 contains 48 MB of SRAM, which is twice the amount of on-chip memory in the first-generation Gaudi.

The chip uses TSMC’s advanced packaging technology to stack 96 GB of HBM2e directly onto the chip’s package to keep more data on the processor's doorstep, up from 32 GB in its previous generation. Memory bandwidth jumped from 1 TB/s to 2.45 TB/s, said Intel.

Need for AI Speed

Improvements in the various on-chip subsystems come at the expense of higher power consumption. The Gaudi2 accelerator card has a maximum thermal design power (TDP) of 600 W, up from 350 W.

Even though it has a higher power envelope, Intel said the Gaudi2 can still be passively cooled. That means the chips likely consume less power and generate less heat than offerings that need liquid cooling.

A server chip’s energy efficiency is a key requirement for cloud-service providers and technology giants trying to keep their data centers' operating costs in check. Reducing their carbon footprints is also a top priority.

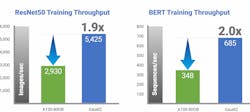

Habana said the Gaudi2 accelerator card will be capable of processing around 5,425 images per second on ResNet-50, a popular AI image-processing model. That is roughly 1.9X the throughput of NVIDIA’s A100 GPU, which is built on the same process node with roughly the same die area and can process 2,930 images per second on ResNet-50. Intel said that Gaudi2 runs the model 3X faster than the first-generation Gaudi.

Habana said Gaudi2 delivers double the throughput of the A100 with 80 GB of HBM when training BERT, an advanced AI model that uses deep learning to classify words or predict strings of text.
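The ResNet-50 figures can be sanity-checked with a few lines of Python. The sketch below uses only numbers quoted in this article; the variable names are illustrative. The last calculation divides the claimed 3X generational speedup by the increase in maximum card TDP (350 W to 600 W) as a rough proxy for performance per watt. That is an assumption, since TDP is a ceiling rather than measured power draw.

```python
# Vendor-quoted ResNet-50 throughput, in images per second.
GAUDI2_IMG_PER_S = 5425
A100_IMG_PER_S = 2930

# Maximum card TDP in watts, and the claimed Gaudi2-over-Gaudi speedup.
GAUDI_TDP_W = 350
GAUDI2_TDP_W = 600
GAUDI2_VS_GAUDI_SPEEDUP = 3.0

# Throughput ratio behind the "1.9X" claim.
speedup_vs_a100 = GAUDI2_IMG_PER_S / A100_IMG_PER_S

# How much the power envelope grew between generations.
tdp_ratio = GAUDI2_TDP_W / GAUDI_TDP_W

# Rough generational gain in throughput per watt of TDP (a proxy only:
# TDP is a maximum, not the power actually drawn during the benchmark).
perf_per_tdp_gain = GAUDI2_VS_GAUDI_SPEEDUP / tdp_ratio

print(f"Gaudi2 vs. A100 on ResNet-50: {speedup_vs_a100:.2f}x")
print(f"TDP increase, Gaudi to Gaudi2: {tdp_ratio:.2f}x")
print(f"Throughput per watt of TDP, Gaudi to Gaudi2: {perf_per_tdp_gain:.2f}x")
```

The throughput ratio works out to about 1.85, which rounds to the quoted 1.9X. Taken at face value, a 3X speedup within a roughly 1.7X larger power envelope suggests about 1.75X more throughput per watt of TDP than the first-generation Gaudi.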

Intel also launched its second-generation, Habana-designed 7-nm chip for AI inferencing known as Greco.

Habana said its Gaudi2 and Greco accelerator cards both use a single software stack, called SynapseAI, that translates models from TensorFlow and PyTorch to run on Habana’s speedy, energy-efficient AI architecture.

The SynapseAI suite of software supports training models on Gaudi2, said Intel. It also supports running inference on any other system, including Intel’s Xeon CPUs, Greco, and even Gaudi2 itself.

Uphill Battle Remains

While Habana is racking up customer wins like AWS with Gaudi, closing the gap with NVIDIA will be a big challenge. Already far ahead of the competition, NVIDIA last month rolled out its next-generation H100 GPU, based on its new Hopper architecture and due out in the second half of 2022. NVIDIA said the H100 offers 3X higher performance per watt than the A100, the GPU that Habana used as its point of comparison for Gaudi2.

Habana’s Gaudi2, NVIDIA's H100, and other specialized processors in the AI category have become a fierce battleground in the chip industry. Rising demand for faster, more efficient AI computations has cultivated a wave of new chip startups in recent years, including Cerebras Systems, Graphcore, and Tenstorrent.

But whether Intel can gain ground on NVIDIA and stay ahead of a crowded market increasingly hinges on how customers react to the performance, power efficiency, and cost savings of Habana’s Gaudi2.

“This deep-learning acceleration architecture is fundamentally more efficient and backed with a strong roadmap,” said Habana’s Medina. According to Intel, Gaudi2 should be in servers that ship by the end of the year.