Technology giants and AI startups are burning through vast amounts of power to stay relevant in the AI race, creating new obstacles in the drive to decarbonize the world’s data centers.

Today’s power-hungry AI chips, such as NVIDIA’s “Hopper” GPU, consume up to 700 W, sharply driving up the power requirements for even a single server used to train and run large language models (LLMs). NVIDIA is raising the bar with its “Blackwell” family of GPUs, which will draw up to 1,200 W, creating a huge amount of heat that must be removed with liquid cooling. It’s not out of the realm of possibility that a single server GPU could consume 2 kW or more by the end of the decade.

This elevates the power-per-rack demands in data centers to more than 100 kW, up from 15 to 30 kW per rack at present. Infineon is trying to stay a step ahead with a new roadmap of power-supply units (PSUs) uniquely designed to handle the current and future power demands of server racks packed with AI silicon. Supplying 3 to 12 kW of power overall, these units feature all three of the company’s power semiconductor technologies—silicon (Si), silicon carbide (SiC), and gallium nitride (GaN)—in a single module to boost efficiency and save space.

While not selling the switched-mode power supplies (SMPS) directly to AI and other technology firms, Infineon is instead rolling out the reference designs that use its power FETs, microcontrollers (MCUs), gate-driver ICs, high-voltage isolation, and other ICs. In addition to its current power supplies that can pump out up to 3 kW or 3.3 kW, Infineon is upping the ante with a reference design for a state-of-the-art 8-kW PSU.

This power supply delivers up to 97.5% efficiency. In addition, power density is 100 W per in.³, which is claimed to be 3X more than 3-kW power supply units currently on the market.
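To put those two figures in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the 97.5% efficiency applies at the full 8-kW output, which the company does not state ("up to" usually refers to a peak somewhere in the load range), and the function names are purely illustrative.

```python
def psu_loss_w(output_w: float, efficiency: float) -> float:
    """Power dissipated as heat inside the PSU for a given output and efficiency."""
    input_w = output_w / efficiency  # power drawn from the AC line
    return input_w - output_w


def psu_volume_in3(output_w: float, density_w_per_in3: float) -> float:
    """Enclosure volume implied by a rated output and a power-density figure."""
    return output_w / density_w_per_in3


# Assumption: peak efficiency holds at full load (not confirmed by the source).
loss_w = psu_loss_w(8000.0, 0.975)
volume_in3 = psu_volume_in3(8000.0, 100.0)

print(f"Heat dissipated at 8 kW: {loss_w:.0f} W")
print(f"Implied volume at 100 W/in^3: {volume_in3:.0f} in^3")
```

Under those assumptions, the supply sheds roughly 205 W as heat and fits in about 80 in.³.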

SiC and GaN: Power Semiconductors for the Future of AI

The PSU leverages each of Infineon’s power switch technologies in a hybrid architecture, using SiC, GaN, and Si power FETs where they will give you the biggest boost in efficiency and power density.

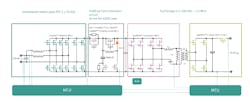

The 8-kW power supply comprises a front-end interleaved, bridgeless totem-pole and a back-end isolated full-bridge LLC—packed into a smaller form-factor than 5.5-kW PSUs based on the Open Compute Project (OCP) standard (Fig. 1).

The totem-pole power factor correction (PFC) uses SiC since it has higher efficiency at high temperatures. One of the advantages of the 650-V SiC MOSFETs is that they have a lower temperature coefficient, which means that the on-resistance (RDS(on)) of the device is less susceptible to heat.

At the heart of the high-frequency full-bridge LLC stage is GaN. The 650-V GaN FET is preferred for its lower capacitance, which enables faster turn-on and turn-off times during switching. Thus, switching frequencies for the LLC stage range from 350 kHz to 1.5 MHz.

Faster switching transitions reduce switching losses, which makes higher switching frequencies practical. That, in turn, opens the door to smaller capacitors and other passive components as well as smaller transformers and other magnetics, enhancing power density. The silicon MOSFETs are used in the rectification stages of the power supply (in the PFC and the DC-DC converter), where switching losses are less of a factor. These devices make the most sense there since they have low RDS(on).

The 8-kW power supply also features full digital control based on Infineon’s MCUs for both the totem-pole PFC and full-bridge LLC stages, paving the way for more accurate and flexible power management. Moreover, the digital control loops help deliver optimal performance under varying load conditions, according to the company. The new unit adds proprietary magnetic devices that play into its high efficiency and power density, too.

One of the other innovations relates to the bulk capacitor in the power supply. A bulk capacitor is a type of energy-storage unit placed close to the input of the power supply. When fully charged, it can supply a safety net of current for the system. The device helps prevent the output of the power supply from falling too far in situations where the input current is disconnected, smoothing out any interruptions in the power-delivery process.

On top of supplying backup power to the server, these bulk capacitors are used to filter and reduce the ripple in the power supply that can happen because of inrush current or other transients in the system.

In the data center, AC-DC power converters are used to deliver a stable voltage to the server housing the CPUs, GPUs, and other AI silicon. In general, such a converter is designed to cope with a relatively short-term loss of the high-voltage AC entering it. The “hold-up time” is the amount of time a power supply can continue to pump out a stable DC output voltage during a power outage or shutdown that interrupts the AC input voltage.

Any longer than that and the energy stored in the capacitor will be depleted. In turn, the output voltage of the PSU will dip or turn off completely. This can cause the processors in the server to shut down or reset themselves, throwing a wrench into the power-hungry process of AI training. Training the largest AI models can take days at a time—and in many cases, significantly longer than that—so interrupting the process can cost you.

Since the amount of time the power supply can continue delivering power after a failure is directly proportional to the capacitance, increasing the capacitance is a plus. The tradeoff is that more capacitance means a larger capacitor that occupies more space in the system and inevitably costs more. The bulk capacitor is already one of the largest components in a PSU, and it tends to be even larger in new high-density power supplies.

Infineon said it solves the problem with the “auxiliary boost circuit” in the PSU. Assembled out of a 600-V superjunction MOSFET and 650-V SiC diode, it extends the time the 8-kW power supply can continue to supply a consistent DC output voltage without using bulky capacitors for intermediate energy storage, saving space and enhancing reliability. The PSU supports a hold-up time of 20 ms at 100% load.
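For a sense of scale, the following Python sketch estimates how much bulk capacitance a 20-ms hold-up at 8 kW would take from a simple energy balance, E = ½ × C × (V_start² − V_min²). The 400-V starting voltage and 300-V minimum usable voltage are illustrative assumptions; the company has not published its bulk-bus voltages. The sketch also ignores converter losses and does not model the auxiliary boost circuit itself.

```python
def holdup_time_s(capacitance_f: float, v_start: float, v_min: float, load_w: float) -> float:
    """Time a capacitor can feed a constant-power load while discharging from v_start to v_min."""
    usable_energy_j = 0.5 * capacitance_f * (v_start**2 - v_min**2)
    return usable_energy_j / load_w


def required_capacitance_f(holdup_s: float, v_start: float, v_min: float, load_w: float) -> float:
    """Capacitance needed to meet a hold-up time target (inverse of holdup_time_s)."""
    return 2.0 * load_w * holdup_s / (v_start**2 - v_min**2)


# Hypothetical bus voltages; only the 20 ms and 8 kW figures come from the article.
V_START = 400.0
V_MIN = 300.0

c_needed_f = required_capacitance_f(0.020, V_START, V_MIN, 8000.0)
t_check_s = holdup_time_s(c_needed_f, V_START, V_MIN, 8000.0)

print(f"Capacitance for 20 ms at 8 kW: {c_needed_f * 1e6:.0f} uF")
print(f"Hold-up time with that capacitance: {t_check_s * 1e3:.1f} ms")
```

With these assumed voltages, the answer is several thousand microfarads of high-voltage capacitance. The same formula shows that widening the usable voltage window (a lower `V_MIN`) cuts the capacitance required for a given hold-up time.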

The company said it plans to roll out the full reference design for the new 8-kW power supply in early 2025.

The PSU: One of the Keys to Data-Center Power Delivery

The role of any power supply unit is to convert the mains AC that enters the data center at a higher voltage from the electric grid to regulated DC power at a lower voltage that works for the circuit board in the server.

In this case, the input is single-phase high-line grid (180 to 305 V AC) while the outputs range from 48 to 51 V DC with up to 160 A. A switch-mode power supply (SMPS) such as Infineon’s is one of the building blocks that power encounters before it enters the processors on a PCB (Fig. 2). While the standard DC output for data-center power supply units used to be 12 V, the technology industry at large is upping the standard to 48 V.
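As a quick sanity check on those ratings, the Python sketch below multiplies out the output voltage and current range and estimates the AC input current at both ends of the input window. It assumes unity power factor and a flat 97.5% efficiency across the input range, neither of which is stated by the company; the constant and function names are illustrative.

```python
RATED_OUTPUT_W = 8000.0   # from the article
ASSUMED_EFFICIENCY = 0.975  # assumption: peak efficiency applies at full load
MAX_OUTPUT_A = 160.0      # from the article


def output_power_w(voltage_v: float, current_a: float) -> float:
    """DC output power at a given voltage and current."""
    return voltage_v * current_a


def input_current_a(output_w: float, efficiency: float, vin_rms: float) -> float:
    """RMS AC input current, assuming unity power factor."""
    return output_w / efficiency / vin_rms


p_at_48v = output_power_w(48.0, MAX_OUTPUT_A)
p_at_51v = output_power_w(51.0, MAX_OUTPUT_A)
i_in_at_180v = input_current_a(RATED_OUTPUT_W, ASSUMED_EFFICIENCY, 180.0)
i_in_at_305v = input_current_a(RATED_OUTPUT_W, ASSUMED_EFFICIENCY, 305.0)

print(f"Output power at 160 A: {p_at_48v:.0f} W to {p_at_51v:.0f} W")
print(f"Input current at 8 kW: {i_in_at_305v:.1f} A to {i_in_at_180v:.1f} A")
```

The output range brackets the 8-kW rating, and under these assumptions the input current lands between roughly 27 A and 46 A.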

One of the keys to minimizing power loss in a data center is delivering power at higher voltages and then stepping down the voltage as close as possible to what is used by the processor cores within AI accelerators or other chips.

Since electrical power is equal to current times voltage (P = I × V), upping the power delivery to 48 V from 12 V means that the same power needs only one-fourth of the current, reducing losses stemming from resistance on the power rails. According to Joule’s law, which states that the power dissipated in a conductor equals resistance times current squared (P = R × I²), those losses decline by 16X. Reducing these losses when distributing power to the server gives you the ability to shrink the busbars and power-carrying wires in servers, driving down costs, too.
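The arithmetic behind the 12-V versus 48-V comparison can be sketched in a few lines of Python. The 1-mΩ rail resistance is a hypothetical placeholder, not a figure from Infineon; the ratios do not depend on it.

```python
def bus_current_a(power_w: float, voltage_v: float) -> float:
    """Current needed to deliver a given power at a given bus voltage (I = P / V)."""
    return power_w / voltage_v


def conduction_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive loss in the distribution path (P_loss = R * I^2)."""
    current_a = bus_current_a(power_w, voltage_v)
    return resistance_ohm * current_a**2


POWER_W = 8000.0              # delivered power, matching the 8-kW PSU
RAIL_RESISTANCE_OHM = 0.001   # hypothetical rail/busbar resistance

i_12v = bus_current_a(POWER_W, 12.0)
i_48v = bus_current_a(POWER_W, 48.0)
loss_12v = conduction_loss_w(POWER_W, 12.0, RAIL_RESISTANCE_OHM)
loss_48v = conduction_loss_w(POWER_W, 48.0, RAIL_RESISTANCE_OHM)

print(f"Current: {i_12v:.0f} A at 12 V vs. {i_48v:.0f} A at 48 V")
print(f"Loss:    {loss_12v:.0f} W at 12 V vs. {loss_48v:.0f} W at 48 V")
print(f"Current ratio: {i_12v / i_48v:.0f}X, loss ratio: {loss_12v / loss_48v:.0f}X")
```

Whatever resistance is plugged in, the current ratio stays at 4X and the loss ratio at 16X, because the loss scales with the square of the current.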

While intermediate bus converters (IBCs) and other power electronics can be placed between the PSU and the server, the PSU tends to be the final step before power enters a circuit board. Once it’s inside the server, the power encounters several DC-DC converters that turn down the 12- or 48-V DC input voltages even further (to a fraction of a volt in most cases) to run the processor cores at the heart of the CPUs, GPUs, or other server chips.

Infineon is also working to solve the “last inch” of the AI power-delivery problem in data centers. At APEC 2024, the company rolled out a family of multiphase voltage regulators (VRs) that flank the processors or accelerators in the server. These modules are placed as close to the point-of-load (POL) of the AI silicon as possible, and they can be clustered around the processor to sling more than 2,000 A into it with up to 90% efficiency.

With AI workloads siphoning more and more power in data centers, power electronics companies don’t want to fall behind. Further in the future, Infineon plans to roll out a reference design for what it calls “the world’s first” 12-kW PSU.
