Check out our coverage of the 2023 Flash Memory Summit. This article is also part of the TechXchange: CXL for Memory and More.

PCI Express (PCIe) is the de facto standard peripheral interface. Running on top of it is Compute Express Link (CXL), a new cache-coherent interconnect that aims to become the industry standard for connecting memory, processors, and accelerators in data centers.

CXL is set to become the dominant CPU interconnect, opening a new world of possibilities for wiring together the data center. Virtually all major CPU and DRAM vendors are backing the new standard, but it’s still early days for CXL, which has been in development since 2019. Now, a Silicon Valley startup is launching a switch system-on-chip (SoC) to help companies harness the latest capabilities of the specification.

XConn Technologies, which debuted at the 2023 Flash Memory Summit (FMS) this month in Santa Clara, Calif., said it has started sampling the industry’s first switch chip compatible with the latest CXL 2.0 standard.

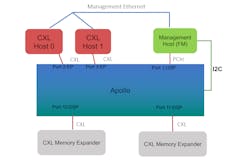

The new SoC, code-named Apollo, “sets a new standard” in flexibility and performance, according to the startup, since it supports both CXL 2.0 and PCIe Gen 5 in a single chip. The switch is designed to reduce port-to-port latency and power consumption per port, making it well-suited to memory- and bandwidth-intensive AI workloads in data centers. It also saves space by replacing separate CXL and PCIe silicon with a single chip.

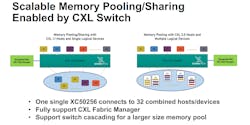

The switch chip, officially called the XC50256, is equipped with 32 ports spanning a total of 256 lanes. Each lane runs at the PCIe Gen 5 data rate of up to 32 GT/s, for up to 2.048 TB/s of aggregate bandwidth (Fig. 1).
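The headline bandwidth figure can be sanity-checked with a little arithmetic. The sketch below assumes the 2.048 TB/s number counts both directions of every lane at the raw 32 GT/s signaling rate; that reading is an assumption, not something XConn states here. The 128b/130b line code is the encoding PCIe Gen 5 uses, and applying it shows how much payload bandwidth remains after encoding overhead.

```python
# Back-of-the-envelope check of the quoted 2.048 TB/s figure.
LANES = 256            # total lanes on the switch (from the article)
PORTS = 32             # total ports on the switch (from the article)
GT_PER_S = 32          # PCIe Gen 5 raw signaling rate per lane
ENCODING = 128 / 130   # PCIe Gen 5 line-code efficiency (128b/130b)


def lane_gbytes_per_s(gt_per_s, efficiency=1.0):
    """Per-lane, per-direction throughput in GB/s (one bit per transfer)."""
    return gt_per_s * efficiency / 8


def aggregate_tbytes_per_s(lanes, gt_per_s, efficiency=1.0, directions=2):
    """Total throughput across all lanes, in TB/s (1 TB = 1000 GB)."""
    return lanes * lane_gbytes_per_s(gt_per_s, efficiency) * directions / 1000


raw = aggregate_tbytes_per_s(LANES, GT_PER_S)
encoded = aggregate_tbytes_per_s(LANES, GT_PER_S, ENCODING)
lanes_per_port = LANES // PORTS

print(f"lanes per port with all 32 ports in use: x{lanes_per_port}")
print(f"raw: {raw:.3f} TB/s, after 128b/130b encoding: {encoded:.3f} TB/s")
```

Under these assumptions the raw figure matches the quoted 2.048 TB/s, and each of the 32 ports works out to a x8 link.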

First Comes Memory Expansion

CXL is a cache-coherent interface between CPUs, DRAM, accelerators, and other peripherals. The base CXL 1.1 standard is focused on memory expansion, which is the ability to add memory capacity to a system. Instead of using a standard DDR DRAM interface, you can directly attach CXL-compatible memory to a CPU, GPU, or other host processor using a PCIe slot, the same way you would plug in an NVMe SSD.

In the event a system runs out of memory for a given workload, it’s possible to strap additional memory to a spare PCIe interface using CXL. The interconnect is also a boon to high-performance computing (HPC) and AI workloads constrained by a lack of memory bandwidth. Slotting more memory into a system via CXL gives you access to more memory bandwidth than the base system can supply. CXL 1.1 also introduced the concepts of coherent co-processing via accelerator cache and device-host memory sharing.

According to XConn, systems using the Apollo switch can support CXL memory pooling and expansion with existing CXL 1.1 hardware, meaning server processors from the likes of AMD and Intel as well as Ampere and AWS.

Systems that take advantage of Apollo will also be future-proofed for upcoming hardware based on the CXL 2.0 standard.

In hybrid mode, the XC50256 supports both CXL and PCIe devices at the same time. This flexibility allows system vendors and other customers to pick and choose the best components when building out a new system.

Then Comes Memory Pooling

Another possibility presented by the CXL standard is memory pooling. CXL-compatible processors and accelerators share a single memory space, with each one being allocated a portion of the memory “pool.”

This functionality was introduced in the CXL 2.0 version of the standard to tackle the tight coupling between CPUs and DRAM, which severely limits the memory capacity and composability of data centers.

With CXL 1.1, CXL-based accelerators such as GPUs or CXL-attached memory could be directly attached to CPUs or other hosts. CXL 2.0 elaborates on this topology, saving a spot in the system for a CXL switch that allows several processors to connect to each other, to accelerators, and to memory in a mix-and-match fashion. With memory pooling, each host processor can access as much memory capacity and bandwidth as it needs from a large shareable memory pool to run a given workload—on demand.
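The on-demand behavior described above can be illustrated with a toy model. This is only a sketch of the bookkeeping idea, not how CXL hardware or any vendor's software implements pooling; the `MemoryPool` and `PoolExhausted` names, the host labels, and the 2048 GB capacity are all hypothetical.

```python
class PoolExhausted(Exception):
    """Raised when a request exceeds the capacity left in the pool."""


class MemoryPool:
    """Toy model of a shared memory pool that hosts draw from on demand."""

    def __init__(self, capacity_gb):
        self.capacity_gb = capacity_gb
        self.allocations = {}  # host name -> GB currently held

    @property
    def free_gb(self):
        return self.capacity_gb - sum(self.allocations.values())

    def allocate(self, host, size_gb):
        """Grant size_gb to host, or raise if the pool cannot cover it."""
        if size_gb <= 0:
            raise ValueError("size_gb must be positive")
        if size_gb > self.free_gb:
            raise PoolExhausted(f"only {self.free_gb} GB free")
        self.allocations[host] = self.allocations.get(host, 0) + size_gb

    def release(self, host):
        """Return everything host holds to the pool; report how much it was."""
        return self.allocations.pop(host, 0)


pool = MemoryPool(capacity_gb=2048)   # illustrative capacity
pool.allocate("host0", 512)
pool.allocate("host1", 1024)
print(pool.free_gb)                   # 512
pool.release("host1")                 # host1 finishes its workload
pool.allocate("host0", 1024)          # host0 grows into the freed capacity
print(pool.free_gb)                   # 512
```

The point of the model is that capacity is not tied to any one host: memory released by one processor becomes available to another without physically moving DIMMs.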

XConn said the XC50256 was designed to play the role of the switch in a CXL 2.0 system. Featuring 32 ports, the switch can connect a combined total of up to 32 host processors and CXL memory devices at once. Accordingly, the chip supports all of the underlying protocols used by CXL, including the PCIe-based I/O protocol (CXL.io) along with cache-coherent protocols for accessing system memory (CXL.cache) and device memory (CXL.mem).

The XC50256 can also be split into several virtual CXL switches so that every host remains isolated from all the other hosts in the system. The switch itself can be configured by a separate fabric manager (FM) (Fig. 2).
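To make the isolation idea concrete, here is a minimal sketch of a fabric manager that carves a 32-port switch into virtual switches. It assumes, as a simplification, that each virtual switch binds one host-facing port to a set of device-facing ports and that a physical port belongs to at most one virtual switch. The `FabricManager` class, its methods, and the port numbers are hypothetical and do not reflect XConn's software or the CXL fabric manager API.

```python
class FabricManager:
    """Toy fabric manager that binds physical ports to virtual switches."""

    def __init__(self, num_ports=32):
        self.num_ports = num_ports
        self.port_owner = {}  # physical port -> virtual switch id

    def create_virtual_switch(self, vcs_id, host_port, device_ports):
        """Bind one host port and its device ports to a new virtual switch."""
        ports = [host_port, *device_ports]
        if len(set(ports)) != len(ports):
            raise ValueError("a port is listed more than once")
        # Validate everything first so a failed request binds nothing.
        for port in ports:
            if not 0 <= port < self.num_ports:
                raise ValueError(f"port {port} does not exist")
            if port in self.port_owner:
                raise ValueError(f"port {port} is already bound")
        for port in ports:
            self.port_owner[port] = vcs_id

    def can_reach(self, port_a, port_b):
        """Two ports can talk only if they sit in the same virtual switch."""
        owner = self.port_owner.get(port_a)
        return owner is not None and owner == self.port_owner.get(port_b)


fm = FabricManager()
fm.create_virtual_switch("vcs0", host_port=0, device_ports=[8, 9])
fm.create_virtual_switch("vcs1", host_port=1, device_ports=[10])
print(fm.can_reach(0, 8))    # True: same virtual switch
print(fm.can_reach(0, 10))   # False: host 0 cannot see vcs1's device
```

Keeping the port-to-switch binding in one place is what lets a single manager enforce that no host sees another host's devices.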

AI’s Memory Bottleneck

More memory capacity is needed for the next generation of data centers. But that’s not all. Connectivity has become a severe bottleneck for workloads such as AI that have to sort through vast amounts of data.

As large language models (LLMs) and other machine-learning (ML) models become larger and more complicated, data centers are in dire need of more memory capacity and bandwidth. Other hardships facing AI data centers include spilling memory to slow storage when main memory is full, excessive memory copying, I/O to storage, serialization/deserialization, and out-of-memory errors.

While CXL is a potential cure for the memory bottleneck and other challenges in data centers, implementation in silicon matters. For its part, XConn said Apollo is purpose-built for AI, ML, and HPC applications.

According to the startup, Apollo helps “break the bandwidth barrier” by delivering twice as many switch lanes as existing solutions, on top of the reductions in port-to-port latency and power consumption per port.

Apollo is said to be ideal for JBOG (Just-a-Bunch-of-GPUs) and JBOA (Just-a-Bunch-of-Accelerators) processor configurations, as a CPU is rarely enough to handle computationally intense AI workloads by itself.

The Apollo SoC, which is currently in customer sampling, also supports switch cascading for a larger memory pool, said JP Jiang, VP of Product Marketing and Business Operation. Mass production of the switch is slated for the second quarter of 2024.

The startup is facing an uphill battle to break into the lucrative data-center market. But the company, which was founded in 2020, is already working closely with big names in the memory-chip business.

It has partnered with Samsung Electronics and MemVerge to create a proof-of-concept pooled CXL memory system with 2 TB of capacity. The system uses XConn’s switch to connect up to eight hosts that can dynamically access CXL memory when they need it. It’s designed to address the limitations in memory capacity and composability in data centers, which are increasingly being purpose-built for AI.

"Modern AI applications require a new memory-centric data infrastructure that can meet the performance and cost requirements of its data pipeline," said MemVerge CEO Charles Fan.
