The latest high-performance CPU and GPU cores from Arm come with a set of blueprints for building them into high-end mobile chips. The launch represents the company’s bid to bring more advanced AI models to Android smartphones and Windows laptops.

At the top of the heap is Arm’s Cortex-X925, which brings a double-digit uplift in instructions per clock (IPC) along with the ability to run at faster clock frequencies and a larger L2 cache. Arm is folding the CPU core into a broader set of blueprints called Arm Compute Subsystems (CSS) for Client, which also unites its latest Cortex-A cores (the A725 and A520) and its Immortalis GPU (the G925) in a package ready to be taped out. Arm said customers can use CSS for Client to build high-end mobile silicon faster and more easily.

“We are now delivering physical implementations across Arm’s CPU and GPU, making it easier to build and deploy Arm-based solutions and leaving nothing to chance,” said Chris Bergey, Arm’s SVP and GM of client, in a briefing.

Arm said CSS for Client is designed to wring the best performance and power efficiency out of its CPU cores so that they can run more advanced AI models by themselves, including large language models (LLMs) such as Google’s Gemini and Meta’s Llama, on high-end smartphones and other devices without using the cloud. The CPU cores are designed to be plugged into chips manufactured on the 3-nm process, noted Bergey.

On top of smartphones, Arm is trying to transplant its instruction set architecture (ISA) and off-the-shelf CPU cores based on it into other areas, including PCs. According to the company, CSS for Client is also a fit for AI PCs that can run AI-powered features from real-time translation to digital assistants on the device directly.

Arm said the combination of the X925, A725, and A520 cores marks its most powerful mobile CPU cluster for AI to date. It brings 46% better AI performance than CPU clusters based on the Cortex-X4 released last year.

The world’s largest vendor of semiconductor IP is also trying to close the gap in performance with custom-designed Arm-based CPU cores from the likes of Apple and Qualcomm, which are winning out in laptops after decades of dominance by Intel and AMD. Instead of tapping off-the-shelf CPU cores from Arm, Apple uses Arm’s architecture as a base for building the M-series chips at the heart of its Mac computers and iPads.

Microsoft recently unveiled a new generation of Surface laptops and Pro tablets powered by Qualcomm’s Snapdragon X chips that make heavy use of Arm CPU cores custom designed by Qualcomm’s Nuvia unit.

Arm’s Solution to Soaring SoC Complexity

Arm said CSS for Client is intended to reduce the difficulty of designing a modern system-on-chip (SoC).

The company’s core business model is licensing CPUs, GPUs, and many other building blocks of IP to firms that bundle these blueprints along with any custom logic they develop into a microprocessor.

However, chip designers face a long list of tasks: choosing the best CPU cores from Arm; configuring them into a complete CPU subsystem along with memory and IO; integrating them with in-house and third-party building blocks such as GPUs; physically arranging everything on the processor’s die; validating and verifying that it all works within the context of the chip design; fine-tuning the full package for the process node they intend to use; and then working with a semiconductor foundry to build the final chip. It all adds up to a huge challenge.

CSS for Client is a pre-packaged set of CPU cores paired with several other pieces of IP, including Arm’s new mobile GPU, the G925. Other parts of the package include its CoreLink system interconnects and system memory management units (SMMUs), which bind the CPU and GPU cores together and improve the delivery of data to and from memory and other parts of the SoC.

Arm said it takes care of many of the more undifferentiated facets of designing a mobile CPU before supplying a cluster of cores that are all configured, verified, validated, and tested for optimal performance, power, and area (PPA).

The upshot is that it no longer supplies the blueprints for CPU cores and other IP and then forces its customers to figure everything out from that point. Arm said CSS for Client is a physical implementation that can be dropped into mobile SoCs, making it easier and faster for companies to build high-end Arm-compatible silicon and optimize it for power and performance. They only need to adjust the amount of memory and IO in the processor and add any desired on-chip accelerators.

Arm already supplies these pre-verified and pre-validated sets of off-the-shelf CPU cores to companies building chips for data centers. But this is the first time it’s brought them to the client market.

The Next Generation of Arm’s Mobile CPU Cores

At the heart of the subsystem is the most advanced mobile CPU core in the lineup: the Cortex-X925.

The “Blackhawk” core is engineered to be manufactured on 3-nm nodes, which will increase the density of logic underpinning it. The CPU runs at a peak clock frequency of 3.8 GHz, up from 3.4 GHz in Cortex-X4.

Though based on the Armv9.2 architecture, the innovations inside the Cortex-X925 stem from its microarchitecture. Arm said it upgraded the CPU with a wider out-of-order (OOO) execution unit that can decode more instructions per cycle and accurately anticipate the next instruction in a program. It widened the vector processing pipeline in the CPU, too. Thus, it can output 50% more TOPS—trillions of operations per second—one of the most widely used metrics for measuring the speed of AI chips.

The X925 can be coupled with up to 3 MB of L2 cache per core, up from 2 MB previously. On-chip memory keeps regularly used instructions close by to save time and keeps data feeding into and out of the CPU.

These along with a wide range of other innovations lead to a CPU core that can supply 36% more single-threaded performance than the Cortex-X4 at the heart of the flagship 4-nm smartphone chips available today.
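As a rough back-of-the-envelope check, the clock and performance figures quoted above can be combined to estimate how much of the gain comes from IPC rather than from frequency. This sketch assumes that single-threaded performance scales as IPC times clock frequency and that the 36% figure was measured at the peak clocks cited (3.8 GHz versus 3.4 GHz). Arm has not confirmed either assumption, and the function name is purely illustrative.

```python
def implied_ipc_uplift(perf_ratio: float, new_ghz: float, old_ghz: float) -> float:
    """Return the IPC ratio implied by a performance ratio and two clock speeds.

    Assumes performance = IPC * frequency, which ignores memory and cache effects.
    """
    freq_ratio = new_ghz / old_ghz
    return perf_ratio / freq_ratio


# Figures taken from the article: 36% more single-threaded performance,
# 3.8 GHz peak clock on the X925 versus 3.4 GHz on the Cortex-X4.
freq_gain = 3.8 / 3.4 - 1
ipc_gain = implied_ipc_uplift(1.36, 3.8, 3.4) - 1

print(f"Clock uplift: {freq_gain:.1%}")        # about 11.8%
print(f"Implied IPC uplift: {ipc_gain:.1%}")   # about 21.7%
```

Under those assumptions, roughly a third of the headline gain would come from clock speed and the rest from the microarchitecture, which is in line with the "double-digit" IPC uplift Arm describes.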

James McNiven, Arm’s VP of Product Management for Client, said it brings a 46% boost in performance on AI workloads—specifically, when measuring a metric called time to first token. This is the time it takes for the LLM inside the device—in this case, Microsoft’s Phi model—to evaluate a question and return the first word of a response. The latency tends to be limited by computing power.
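To make the metric concrete, here is a minimal sketch of how time to first token could be measured around any model that streams its output. The `fake_llm` generator is a hypothetical stand-in that simply sleeps to mimic prompt processing. It is not Phi, and it is not how Arm ran its benchmark.

```python
import time
from typing import Callable, Iterable, Iterator, Tuple


def time_to_first_token(
    stream: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> Tuple[str, float]:
    """Return the first token from a stream and the seconds elapsed before it arrived."""
    start = clock()
    iterator = iter(stream)
    first = next(iterator)  # blocks while the model processes the prompt
    return first, clock() - start


def fake_llm(prompt: str, prefill_seconds: float = 0.05) -> Iterator[str]:
    """Hypothetical streaming model: sleep to mimic prompt processing, then yield words."""
    time.sleep(prefill_seconds)
    for word in ("Arm", "designs", "CPU", "cores."):
        yield word


token, ttft = time_to_first_token(fake_llm("What does Arm do?"))
print(f"first token {token!r} after {ttft * 1000:.0f} ms")
```

Because the generator does no work until the first `next()` call, the measured interval covers the whole prompt-processing phase, which is the compute-bound part the article refers to.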

While the Cortex-X925 runs the show in terms of performance, it’s relatively power-hungry and dissipates extra heat, which takes a toll on battery life. These tradeoffs pose a problem since most of the applications for the CPU are in smartphones and PCs. To balance things out, Arm plans to plug it into heterogeneous architectures that mix and match different sized CPU cores with different performance and power profiles.

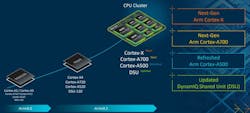

Arm said upgrades were made to the Cortex-A725, which it calls the workhorse for CPU workloads in mobile devices. Specifically designed to sustain higher clock speeds, it’s estimated to boost performance per watt by 35% compared to the A720. Updates were also made to the Cortex-A520 to consume 15% less power. Both are built on the same Armv9.2 architecture as the X925, so applications are indifferent to what core they run on.

Traditionally, these would act as the “big” and “little” CPUs in Arm’s big.LITTLE architecture, which has long been the gold standard in smartphones. In these situations, the “big” CPU cores such as the A725 handle most of the hefty computations in the SoC, while “little” cores like the A520, which are focused on high power efficiency, take care of lighter workloads and background tasks without dissipating much power. The larger Cortex-X cores add another dimension to the architecture by managing more “bursty” workloads.
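The division of labor described above can be illustrated with a toy placement rule. This is only a sketch: the `Task` fields, the 0.3 threshold, and the `pick_core` function are made up for illustration, and real operating-system schedulers rely on measured utilization and energy models rather than a fixed cutoff.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    load: float   # estimated CPU demand, 0.0 (idle) to 1.0 (saturating)
    bursty: bool  # short, latency-sensitive spike such as an app launch


def pick_core(task: Task, light_threshold: float = 0.3) -> str:
    """Map a task to a core class. The threshold is hypothetical."""
    if task.bursty:
        return "Cortex-X925"   # peak performance for short bursts
    if task.load <= light_threshold:
        return "Cortex-A520"   # efficiency core for light or background work
    return "Cortex-A725"       # workhorse core for sustained heavy work


tasks = [
    Task("app launch", 0.9, True),
    Task("video call", 0.6, False),
    Task("mail sync", 0.1, False),
]
for t in tasks:
    print(f"{t.name} -> {pick_core(t)}")
```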

All three CPU cores are glued together in clusters with Arm’s DynamIQ Shared Unit (DSU). The updated DSU-120 unites up to 14 CPU cores per cluster. It adds several new power modes that reduce power by 50% for most workloads, prolonging the battery life of consumer devices. Arm said the DSU-120 can also reduce the power wasted across the CPU cluster due to cache misses by 60%.

“Our partners can take this range of different CPU and GPU options to carefully craft their own solutions across large-screen compute and smartphones all the way to [televisions] and wearables,” said McNiven.

Kleidi: The Software Tools That Bind AI to Arm

The semiconductor IP giant is also rolling out a new family of software libraries called Arm “Kleidi.” The libraries can be embedded into any software platform to pull even more performance out of its CPUs for AI and other jobs.

One of the first software libraries in the Kleidi lineup is focused on AI workloads. It encompasses a set of computational kernels that can be folded into different AI frameworks, including general-purpose software tools such as TensorFlow and PyTorch that can be used to run any neural network on any device. Arm said they can even be embedded in AI models—for instance, Meta’s Llama and Microsoft’s Phi—before they’re placed in larger applications. Another new Kleidi library will target computer vision.

As a result, the software can tap into the Scalable Vector Extension (SVE), the Scalable Matrix Extension (SME), and other instructions in the Armv9.2 architecture that are leaned on for AI inferencing on Arm CPUs.

While neural processing units (NPUs) are becoming core building blocks in virtually every mobile chip, Kleidi is focused on the CPU, which is where most AI workloads run in smartphones and laptops. “They’re built for the CPU first, and for many of them, that’s where they stay,” said Geraint North, VP of Developer Platforms at Arm, adding that the “CPU is the only compute engine that you can guarantee will be able to run the networks of tomorrow.”

Regarding Kleidi, he added, “They're small, highly optimized kernels that are designed to be integrated wherever AI or computer vision is being done and guarantee developers the best possible performance no matter what Arm they run on.”

MediaTek said it plans to use the latest X925 and A725 CPU cores in its future Dimensity 9400 family of chips for Android smartphones, due out by the end of the year.
